What is the crawl budget?

The crawl budget is the number of URLs on a website that search engine crawlers will crawl within a specific time period.

Google allocates a crawl budget to each website, and Googlebot uses that budget to decide how many pages to crawl and how often.

Why is the Crawl Budget limited?

Crawling is limited so that crawlers do not send a website more requests than its server can handle. Excessive crawler requests consume server resources, which can hurt site performance and the user experience.

How to Determine the Crawl Budget?

To estimate a website's crawl budget, check the Crawl Stats report in your Google Search Console account.

The report shown above gives the average number of pages crawled per day. In this example the average is 371 pages per day, so the monthly crawl budget is roughly 371 × 30 = 11,130 pages.

Although this number changes over time, it gives an approximation of how many pages will be crawled in a given period.
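The arithmetic above can be written as a small helper. This is a minimal sketch that assumes you have copied the daily "pages crawled" counts out of the Crawl Stats report by hand; the function name is hypothetical, and the result is only as approximate as the report itself.

```python
from statistics import mean

def estimate_monthly_crawl_budget(daily_crawls: list[int], days: int = 30) -> int:
    """Estimate a monthly crawl budget from daily 'pages crawled' counts.

    The counts are assumed to come from the Crawl Stats report; the result
    is an approximation because crawl rates change from day to day.
    """
    if not daily_crawls:
        raise ValueError("need at least one day of crawl data")
    average_per_day = mean(daily_crawls)
    return round(average_per_day * days)

# The example from the text: 371 pages crawled per day on average.
print(estimate_monthly_crawl_budget([371]))  # 11130
```

Passing a longer list of daily counts averages them first, which smooths out one-off spikes.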

To learn more about how search engine bots behave on your site, analyze your server log files. They show which URLs crawlers request and how often.
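A minimal sketch of this kind of log analysis, assuming your server writes the common Apache/Nginx "combined" log format. It counts, per day, the requests whose user-agent string contains "Googlebot". The regular expression and function name are illustrative, not part of any tool. Because a user-agent can be spoofed, Google recommends verifying real Googlebot traffic with a reverse DNS lookup before relying on these counts.

```python
import re
from collections import Counter

# Captures the date and the user-agent from an Apache/Nginx "combined" log line.
COMBINED_LOG = re.compile(
    r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]*\] '  # [10/Oct/2021:13:55:36 +0000]
    r'"[^"]*" \d{3} \S+ '                       # "GET /page HTTP/1.1" 200 2326
    r'"[^"]*" "(?P<agent>[^"]*)"'               # "referer" "user-agent"
)

def googlebot_hits_per_day(lines):
    """Count log lines per day whose user-agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = COMBINED_LOG.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("day")] += 1
    return hits
```

To use it, pass any iterable of log lines, for example an open file handle for your (hypothetically named) `access.log`.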

How to Optimize Crawl Budget?

A web page must be crawled before it can be indexed and appear in Google results. The following seven-point checklist can help you optimize your crawl budget for SEO.

Allow Crawling of Important Pages in the robots.txt File

Make sure the robots.txt file does not block relevant pages and content, so that they remain crawlable. To make the most of your crawl budget, block Google from crawling unnecessary pages such as user login pages, certain files, or administrative sections of the website. Use the robots.txt file to keep crawlers out of these files and folders; note that robots.txt controls crawling, not indexing. For large websites, this is one of the most effective ways to conserve crawl budget.
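Before deploying robots.txt changes, it helps to check which URLs the rules actually block. This sketch uses Python's standard `urllib.robotparser` against a hypothetical robots.txt for `www.example.com` that blocks `/login/` and `/admin/`. One caveat: Python's parser applies the first matching rule, while Google applies the most specific one, so keep the rules simple (as here) if you test them this way.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks the login and admin areas, leaves the rest crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /admin/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://www.example.com/products/blue-shirt",
    "https://www.example.com/login/",
    "https://www.example.com/admin/settings",
]:
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{'ALLOW' if allowed else 'BLOCK'}  {url}")
```

The product page should print as ALLOW and the login and admin URLs as BLOCK, confirming that important pages stay crawlable.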

Avoid long redirect chains

If a URL passes through a long chain of 301 or 302 redirects, the search engine crawler may stop following the chain before it reaches the destination, so crucial pages can go uncrawled and unindexed. Every extra redirect in a chain also wastes crawl budget.

Avoid redirects as much as possible. Large websites, however, usually cannot do without them entirely. The best approach is to make sure each URL redirects no more than once, and only when absolutely necessary.

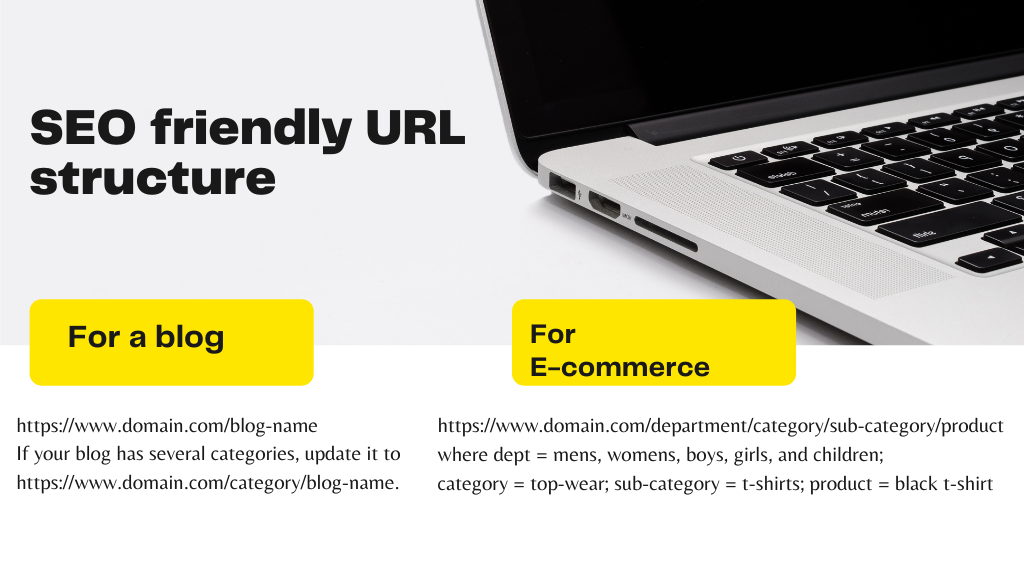
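One way to keep every URL to a single redirect is to collapse chains so each old URL points straight at its final destination. This is a minimal sketch assuming you can export your redirect rules as a source-to-target mapping (for example from your server config or CMS); the function name and the `max_hops` safety limit are hypothetical choices, not values from any tool.

```python
def flatten_redirects(redirects: dict[str, str], max_hops: int = 10) -> dict[str, str]:
    """Map every redirecting URL straight to its final destination.

    `redirects` maps a source URL to the URL it currently redirects to.
    Raises ValueError on a redirect loop or a chain longer than max_hops
    (an arbitrary safety limit for this sketch).
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        hops = 1
        # Keep following while the current target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop starting at {source}")
            seen.add(target)
            target = redirects[target]
            hops += 1
            if hops > max_hops:
                raise ValueError(f"chain from {source} exceeds {max_hops} hops")
        flattened[source] = target
    return flattened

# A three-hop chain becomes three single-hop redirects.
print(flatten_redirects({"/a": "/b", "/b": "/c", "/c": "/final"}))
# {'/a': '/final', '/b': '/final', '/c': '/final'}
```

Writing the flattened mapping back as your redirect rules means a crawler never needs more than one hop, and the loop check catches rules that would trap it entirely.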

Managing URL Parameters

URL parameters can generate a practically unlimited number of duplicate URL variations for the same content. Crawling these redundant parameter URLs wastes crawl budget, increases server load, and limits how much SEO-relevant content gets crawled and indexed.

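To conserve crawl budget, it is recommended that you tell Google about URL parameter variations in your Google Search Console account, under Legacy tools and reports > URL Parameters. Note that Google retired the URL Parameters tool in 2022; where it is no longer available, canonical tags and robots.txt rules are the usual ways to handle parameter URLs.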
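To see how many of your URLs are really parameter duplicates, you can normalize them and count what remains. This sketch uses only `urllib.parse`; the set of "ignored" parameters (`sessionid`, `sort`, `ref`, and anything starting with `utm_`) is a hypothetical example, so replace it with the parameters that do not change content on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this site.
IGNORED_PARAMS = {"sessionid", "sort", "ref"}

def normalize_url(url: str) -> str:
    """Drop content-neutral parameters, sort the rest, and drop the fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith("utm_")
    ]
    kept.sort()  # parameter order alone should not create a "new" URL
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

variants = [
    "https://www.example.com/shoes?color=red&sessionid=123",
    "https://www.example.com/shoes?utm_source=newsletter&color=red",
    "https://www.example.com/shoes?sort=price&color=red",
    "https://www.example.com/shoes?color=blue",
]
print(sorted({normalize_url(u) for u in variants}))
# ['https://www.example.com/shoes?color=blue', 'https://www.example.com/shoes?color=red']
```

Four crawlable URLs collapse to two distinct pages here; the normalized form is also a reasonable candidate for each page's canonical URL.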

Improve Site Speed

Improving site performance makes it more likely that Googlebot will crawl more pages. According to Google, a fast-loading site improves the user experience and also increases the crawl rate.

In other words, a slow website wastes crawl budget. When you improve site speed and apply other technical SEO improvements, pages load faster and Googlebot can crawl more pages in the same amount of time.


Many leading e-commerce SEO firms make page speed optimization part of a concrete SEO plan for e-commerce websites, aiming to drive more traffic, improve the user experience, and lower bounce rates.
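A rough way to find slow pages is to time a few downloads yourself and flag the outliers. In this sketch, `measure_response_time_ms` makes a real network request with the standard `urllib.request` module, and `summarize_response_times` reports the average and the URLs above a threshold. Both names and the 1,000 ms threshold are hypothetical; the Crawl Stats report's own average response time remains the authoritative figure for Googlebot.

```python
import time
import urllib.request
from statistics import mean

def measure_response_time_ms(url: str, timeout: float = 10.0) -> float:
    """Time one full download of `url` in milliseconds (makes a network request)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()
    return (time.perf_counter() - start) * 1000

def summarize_response_times(timings_ms: dict[str, float], slow_threshold_ms: float = 1000.0):
    """Return the average time and the URLs slower than the threshold, slowest first.

    `timings_ms` must not be empty; the threshold is a hypothetical default.
    """
    average = mean(timings_ms.values())
    slow = sorted(
        (url for url, ms in timings_ms.items() if ms > slow_threshold_ms),
        key=timings_ms.get,
        reverse=True,
    )
    return average, slow
```

You would build `timings_ms` by calling `measure_response_time_ms` for a sample of important URLs, then fix the slowest pages first.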

Updating Sitemap

Include only the most important pages in the XML sitemap so that Googlebot can find and crawl them more frequently. It is also critical to keep the sitemap up to date and free of redirects and errors.
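A sitemap generator can enforce the "no redirects, no errors" rule automatically. This sketch builds a sitemap with the standard `xml.etree.ElementTree` module, assuming you already have a list of `(url, http_status, lastmod)` tuples from a site crawl; the input shape and function name are hypothetical. Only URLs that returned HTTP 200 are included.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, http_status, lastmod) tuples, keeping only 200s."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status, lastmod in pages:
        if status != 200:  # leave out redirects (3xx) and errors (4xx/5xx)
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

pages = [
    ("https://www.example.com/", 200, "2021-06-01"),
    ("https://www.example.com/shoes", 200, "2021-06-15"),
    ("https://www.example.com/old-page", 301, "2020-01-10"),
    ("https://www.example.com/missing", 404, "2020-03-02"),
]
print(build_sitemap(pages))
```

Regenerating the file on each deploy keeps it current without anyone having to remember to remove redirected or deleted pages.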

Fixing HTTP Errors

Technically speaking, broken links and server errors eat up crawl budget. Take the time to check your website for 404 and 503 errors and fix them as quickly as possible.

To do so, open the Coverage report in Google Search Console and check whether Google has found any 404 errors. Download the full list of affected URLs and analyze it: wherever an equivalent or comparable page exists, redirect the broken 404 URL to that page.

For this kind of audit, Screaming Frog and SE Ranking are recommended. Both website audit tools cover the items on a technical SEO checklist very well.
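When the list of 404 URLs is long, a script can propose a comparable live page for each one as a starting point. This sketch uses `difflib.get_close_matches` from the standard library to pick the most similar live URL path; the function name and the 0.6 similarity cutoff are hypothetical choices. String similarity is not the same as content equivalence, so review every suggestion before turning it into a redirect.

```python
from difflib import get_close_matches

def suggest_redirects(broken_urls, live_urls, cutoff: float = 0.6):
    """Suggest the most similar live URL for each broken one, or None if nothing is close."""
    suggestions = {}
    for broken in broken_urls:
        matches = get_close_matches(broken, live_urls, n=1, cutoff=cutoff)
        suggestions[broken] = matches[0] if matches else None
    return suggestions

live = ["/shoes/red-sneakers", "/shoes/blue-boots", "/about"]
print(suggest_redirects(["/shoes/red-sneaker", "/xyz123"], live))
```

URLs that get `None` have no obvious counterpart; for those, returning a proper 404 or 410 is usually better than redirecting to an unrelated page.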

Internal Linking

Googlebot tends to prioritize crawling URLs that have many internal links pointing to them. Internal links help Googlebot discover the different pages on a website that need to be indexed for the site to appear in Google SERPs, which is why internal linking was one of the most essential SEO trends for 2021. Internal links also help Google understand a website's architecture, so it can explore and navigate the site with ease.
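Two quick internal-linking checks are how many internal links point to each page and how many clicks each page is from the homepage. This sketch assumes you have a link graph (each page mapped to the internal pages it links to), for example exported from a site crawler; the function names and the sample graph are hypothetical. Pages that a breadth-first search from the homepage never reaches are orphans that crawlers can only find through other routes such as the sitemap.

```python
from collections import Counter, deque

def inbound_link_counts(links: dict[str, list[str]]) -> Counter:
    """Count how many distinct pages link to each page."""
    counts = Counter({page: 0 for page in links})
    for targets in links.values():
        for target in set(targets):  # count each linking page once
            counts[target] += 1
    return counts

def click_depth(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; pages not returned are orphans."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

site = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/shoes/red", "/"],
    "/blog": ["/shoes/red"],
    "/shoes/red": [],
    "/orphan": ["/"],
}
print(inbound_link_counts(site))
print(set(site) - set(click_depth(site)))  # {'/orphan'}
```

Important pages with few inbound links or a large click depth are good candidates for extra internal links.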

Conclusion

Optimizing crawling and indexing goes hand in hand with optimizing the website itself. Companies that provide SEO services in India usually take the crawl budget into account when performing SEO audits.

If a site is well maintained or very small, there is no need to worry about the crawl budget. However, for larger websites, sites with many newly added pages, and sites with a high number of redirects and errors, it is worth paying attention to how to make the most of the crawl budget.

Monitoring the crawl rate regularly in the Crawl Stats report helps you spot any sudden surge or drop in crawling.
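Spotting a surge or drop can be automated by comparing each day's crawl count with the average of the days before it. This is a minimal sketch assuming a list of daily "pages crawled" counts (from the Crawl Stats report or your server logs); the 7-day window and the 50% threshold are hypothetical defaults to tune for your site.

```python
def flag_crawl_anomalies(daily_counts, window: int = 7, threshold: float = 0.5):
    """Return (day_index, count, baseline) for days deviating from the trailing mean.

    A day is flagged when its count differs from the mean of the previous
    `window` days by more than `threshold` (0.5 means 50%).
    """
    anomalies = []
    for i in range(window, len(daily_counts)):
        baseline = sum(daily_counts[i - window:i]) / window
        if baseline and abs(daily_counts[i] - baseline) / baseline > threshold:
            anomalies.append((i, daily_counts[i], baseline))
    return anomalies

# A steady week around 370 pages/day, then a normal day, a spike, and a drop.
counts = [370] * 7 + [375, 900, 100]
for day, count, baseline in flag_crawl_anomalies(counts):
    print(f"day {day}: {count} crawled vs. ~{baseline:.0f} expected")
```

A flagged spike may point to a new batch of parameter URLs or redirects being crawled, while a flagged drop is worth checking against server errors and response times.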